WARP Project Forums - Wireless Open-Access Research Platform


#1 2019-Apr-19 14:31:07

- Yan Wang

- Member

- Registered: 2018-Oct-25

- Posts: 21

802.11 reference design: time difference between DATA and ACK.

Hello,

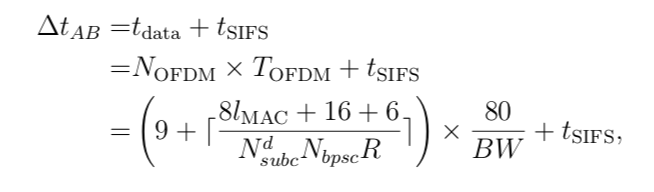

I have a question about the time difference between DATA and ACK packets. I configured two WARP boards with payload = 20 bytes and PHY_MODE = 'HTMF', and calculated the theoretical time difference using the equation shown in the following figure.

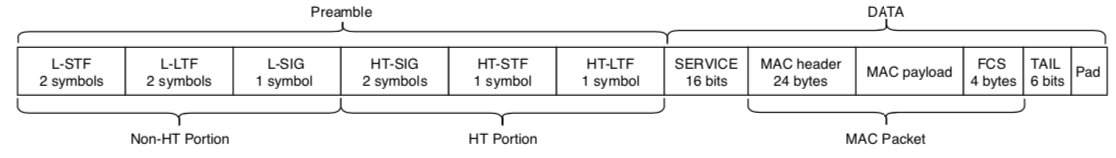

The equation is derived from the IEEE 802.11 OFDM physical-layer packet format.

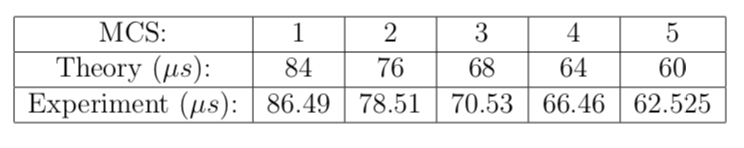
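For readers without the figures, the theoretical calculation can be sketched as follows. This is a minimal sketch, not necessarily the exact equation in the image: it assumes one spatial stream, 20 MHz, BCC coding, 800 ns guard interval, and that the gap is measured from the start of the DATA waveform to the start of the ACK waveform. The function names, the 48-byte MPDU length (20-byte payload plus a 24-byte MAC header and 4-byte FCS), and the 16 us effective SIFS (16 us at 5 GHz, or 10 us plus the 6 us signal extension at 2.4 GHz) are assumptions for illustration.

```python
import math

# Data bits per OFDM symbol for HT MCS 0-7 (20 MHz, one spatial stream, 800 ns GI).
N_DBPS = [26, 52, 78, 104, 156, 208, 234, 260]


def htmf_duration_us(mpdu_bytes, mcs):
    """Airtime in microseconds of an HT mixed-format PPDU (BCC, one stream, no STBC)."""
    # L-STF (8) + L-LTF (8) + L-SIG (4) + HT-SIG (8) + HT-STF (4) + one HT-LTF (4)
    preamble_us = 8 + 8 + 4 + 8 + 4 + 4
    # 16 SERVICE bits + payload bits + 6 tail bits, rounded up to whole 4 us symbols
    n_sym = math.ceil((16 + 8 * mpdu_bytes + 6) / N_DBPS[mcs])
    return preamble_us + 4 * n_sym


def data_to_ack_gap_us(mpdu_bytes, mcs, effective_sifs_us=16):
    """Start-of-DATA to start-of-ACK interval, assuming the ACK follows after SIFS."""
    return htmf_duration_us(mpdu_bytes, mcs) + effective_sifs_us


if __name__ == "__main__":
    for mcs in range(8):
        print(mcs, data_to_ack_gap_us(48, mcs))
```

If the MPDU actually carries extra bytes (an LLC header or a QoS control field, for example), the symbol count at low MCS values changes, so the assumed length should be checked against the logged packet length before comparing with measurements.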

I calculated the time difference for different MCS values and also measured it experimentally, as can be seen in the following figure.

The measured values are consistently about 2.5 us larger than the theoretical ones. Why does this happen, and what does the 2.5 us correspond to? Looking forward to your reply.

Best wishes,

Yan


#2 2019-Apr-22 10:16:48

- chunter

- Administrator

- From: Mango Communications

- Registered: 2006-Aug-24

- Posts: 1212

Re: 802.11 reference design: time difference between DATA and ACK.

I have a couple of follow-up questions:

- What version of the 802.11 Reference Design are you using to run the experiments? IFS values were re-calibrated in the 1.7.6 release.

- How are you measuring the time difference using the log? Are you comparing the TX_LOW timestamp of the DATA with the RX_OFDM timestamp of the corresponding ACK, both on the same node? Or is this from the perspective of a third monitor node that only looks at the RX_OFDM timestamps of both the DATA and the ACK? The latter approach is the easiest since you don't have to account for Tx/Rx hardware latencies.


#3 2019-Apr-24 04:51:34

- Yan Wang

- Member

- Registered: 2018-Oct-25

- Posts: 21

Re: 802.11 reference design: time difference between DATA and ACK.

Hello,

I am using the latest version, the 1.7.8 release, to run the experiment.

I measured the values using the RX_OFDM timestamp of the DATA on the WARP node configured as the STA and the RX_OFDM timestamp of the corresponding ACK on the AP node. Is the offset caused by hardware latencies?

Best Regards,

Yan


#4 2019-Apr-24 10:39:25

- chunter

- Administrator

- From: Mango Communications

- Registered: 2006-Aug-24

- Posts: 1212

Re: 802.11 reference design: time difference between DATA and ACK.

There are pros and cons to whatever strategy you choose:

(1) What you’re describing is comparing the timestamps present in two different logs across two different nodes. The advantage of this is that you shouldn’t have to worry about the relative latencies between Tx and Rx. The disadvantage is that you do have to make sure the two log files have similar timebases, since both nodes have independent clocks dictating their respective MAC times. In your experiment, is your AP sending beacons at the typical 100 TU interval? If so, that should keep the MAC time at the STA from drifting more than 1 us within a beacon interval, given typical oscillator precisions.

(2) An alternative experiment would be to look at the TX_LOW timestamp of the DATA and compare it to the RX_OFDM timestamp of the ACK all within a single log file. This avoids the problem of MAC time changes between independent nodes, but it introduces the problem of needing to compensate for latencies between Tx and Rx. There are two important values for this:

- First, inherent to the 802.11 protocol and our PHY architecture is the time it takes to assert the RX START event (which is when the RX_OFDM timestamp is latched) after the first sample of an Rx waveform enters the baseband. This number is 25 us (gl_mac_timing_values.t_phy_rx_start_dly in the DCF code).

- Second, inherent to the WARP v3, are hardware latencies. We calibrated these values with our own measurements and you can see them here. Those values are used to “tweak” the IFS settings in the node. Specifically, the post-Tx DIFS is set to a value smaller than a standard DIFS by (tx_analog_latency+tx_radio_prep_latency). We effectively start transmitting a little early such that the first sample leaves the radio right on a DIFS boundary. The SIFS is adjusted by the same amount for the same reason. Note: the Rx latency is already compensated for by the fact that the post-Rx SIFS timer begins after a calibrated Rx extension. These hardware latencies are used such that the node realizes the correct behavior specified in the standard, but they are not used to tweak timestamps present in logs. So if you want to compare a Tx and Rx timestamp in the log, you'll have to account for these values.

(3) Another alternative is to let a third node receive everything and look at the RX_OFDM of the DATA and the RX_OFDM of the ACK all in a single log. This avoids all of the disadvantages above. The only downside is that you need to run a third node.
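To put a rough number on the timebase concern in strategy (1), the drift accumulated between two beacons can be estimated from the relative frequency error of the two oscillators. This is only an illustrative sketch: it assumes the STA re-synchronizes its MAC time on every beacon, and the ppm values and the function name are hypothetical, not measured properties of the WARP v3 hardware.

```python
TU_US = 1024  # one 802.11 time unit (TU) in microseconds


def drift_us(interval_tu, relative_ppm):
    """MAC-time drift in microseconds accumulated over interval_tu time units."""
    return interval_tu * TU_US * relative_ppm * 1e-6


if __name__ == "__main__":
    # Hypothetical relative oscillator errors, in parts per million.
    for ppm in (1, 5, 10, 20):
        print(f"{ppm:>2} ppm over 100 TU -> {drift_us(100, ppm):.3f} us")
```

Under these assumptions, a 1 us bound over a 100 TU beacon interval corresponds to a relative error of a little under 10 ppm between the two nodes.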

I suggest running experiments (2) and (3) above to see whether you can confirm the 2.5 us offset you reported earlier. We’ll double-check our timings before the next release, but in the meantime, if you find there is a consistent offset, you can fix it yourself with a simple change to the C code. Going back to these lines, subtracting another 25 from those values should reduce the realized SIFS by an extra 2.5 usec.
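For experiment (3), the post-processing on the monitor node's log could look like the sketch below. It assumes the RX_OFDM entries have already been extracted into time-ordered `(timestamp_us, kind)` tuples; that tuple layout and the function name are hypothetical and are not part of the reference design's log format. Because both timestamps are latched with the same RX START delay, that delay cancels in the difference, leaving the DATA airtime plus the gap before the ACK.

```python
def realized_gaps_us(entries, data_duration_us):
    """Return the DATA-end to ACK-start gap for every DATA immediately followed by an ACK.

    entries: time-ordered list of (timestamp_us, kind), kind being "DATA" or "ACK".
    data_duration_us: airtime of the DATA packet in microseconds.
    """
    gaps = []
    prev = None
    for timestamp_us, kind in entries:
        if kind == "ACK" and prev is not None and prev[1] == "DATA":
            # Start-to-start difference minus DATA airtime leaves the inter-frame gap.
            gaps.append(timestamp_us - prev[0] - data_duration_us)
        prev = (timestamp_us, kind)
    return gaps


if __name__ == "__main__":
    # Made-up timestamps purely to exercise the function.
    log = [(1000, "DATA"), (1116, "ACK"), (5000, "DATA"), (5118.5, "ACK")]
    print(realized_gaps_us(log, data_duration_us=100))
```

A result that sits consistently above the nominal value (SIFS, plus any signal extension) by the same amount across MCS values would point to a fixed timing offset rather than a per-symbol calculation error.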